Auger, James Henry. “Living With Robots: A Speculative Design Approach.” Journal of Human-Robot Interaction, vol. 3, no. 1, 28 Feb. 2014, pp. 20–42., doi:10.5898/jhri.3.1.auger.

Ball, Linden J., and Thomas C. Ormerod. “Putting Ethnography to Work: the Case for a Cognitive Ethnography of Design.” International Journal of Human-Computer Studies, vol. 53, no. 1, 2000, pp. 147–168., doi:10.1006/ijhc.2000.0372.

Boshernitsan, Marat, and Michael Sean Downes. Visual programming languages: A survey. Computer Science Division, University of California, 2004.

This report surveys the history and theory behind the development of various visual programming languages, and sorts them into five categories: (1) purely visual languages, (2) hybrid text and visual systems, (3) programming-by-example systems, (4) constraint-oriented systems, and (5) form-based systems. A language can be placed in more than one category. In purely visual languages, programmers manipulate pictographic icons. In hybrid text and visual systems, programs are first created visually, then translated into text. In programming-by-example systems, the user trains, or ‘teaches’, an agent to perform a task. In constraint-oriented systems, a programmer can model objects in the programming world as physical objects, constrained to mimic real-world behaviour, such as gravity. In form-based systems, such as Microsoft Excel, the programmer uses the logic linking cells together to analyze data, with the cell states progressing over time. These categories inform the way that information is organized in SpatialFlow at a technical level. For example, using the node-based system, users can create a simple program visually, with some text and some symbols. However, because the underlying system in SpatialFlow’s VR prototype is written in the C# programming language using the Unity game engine, the prototype is best classified as a hybrid text and visual system. The other categories of visual languages help to identify other ways in which a spatial computing programming environment can exist for various tasks. For example, there could be a section in SpatialFlow focused on spreadsheet manipulation, which can be conceived of as an augmented form of Microsoft Excel.

Donalek, Ciro, et al. “Immersive and Collaborative Data Visualization Using Virtual Reality Platforms.” 2014 IEEE International Conference on Big Data (Big Data), 2014, pp. 609–614., doi:10.1109/bigdata.2014.7004282.

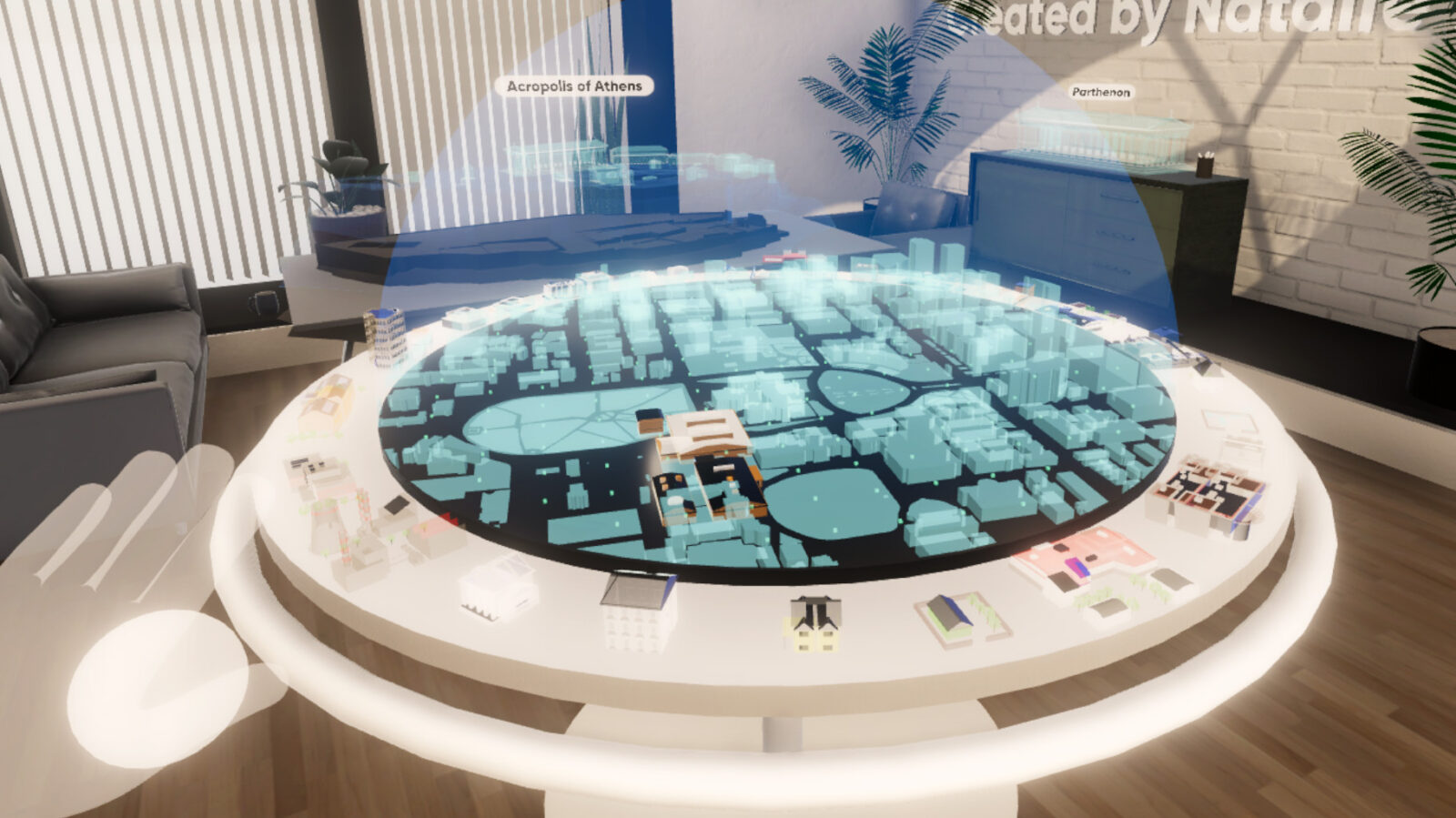

iViz, a project researched and developed by a team at the California Institute of Technology, is a general-purpose collaborative VR data visualization tool designed for the scientific community. The project allows for the rendering of large-scale datasets with many dimensions, which are difficult to represent in two dimensions alone. In the software, XYZ spatial coordinates, sizes, colours, transparencies and shapes of data points, textures, orientations, rotations, pulsations, etc. are used to represent as many dimensions of a particular dataset as possible. Users are rendered as avatars in the same virtual space as the data. As well as each user having their own viewpoint, there is a feature to ‘broadcast’ the view of one user as they navigate through the data. It is possible to link data points to web pages in the software. Menus in the program allow users to easily open the assigned web pages, which provide more information on specific points. This interlinking provides a richer experience, combining data with its context, while avoiding excess clutter in the data view. Insight gained by the iViz research team inspires possible features in SpatialFlow, such as ways for users to present their work and to link between different contexts in the same interface. There are plans to make the iViz project publicly available at no cost. The implementation of 3D visualization and telepresence in VR inspires some solutions in SpatialFlow, such as the ability to see others and manipulate data from your field of view. However, iViz is strictly a VR visualization tool, with a basic black-and-white background area. SpatialFlow’s VR background takes on the appearance of a real-life office room or creative workspace, visualizing what a future workspace could look like as holograms in a physical, pre-existing room.

Draskovic, Michael. “Can Spatial Computing Change How We View the Digital World?” Pacific Standard, 26 July 2017, psmag.com/news/can-spatial-computing-change-how-we-view-the-digital-world.

This article defines spatial computing and contextualizes the term by connecting it to real-world uses that are currently being implemented, and others that can be understood and implemented in the near future. Emphasis is placed on the importance of understanding both the technology’s promise and its limitations when it comes to social applications. There are already ways in which AR tools are being used for learning, working, and leisure. The article mentions specific examples and case studies. The viral AR app Pokemon Go succeeded in bringing people together, forming a community as they collaborated in the game. ENGAGE Boston, a youth-focused non-profit, began a partnership with the city of Boston, Emerson College, and the developers of Pokemon Go in order to encourage youth to explore and learn about the community’s cultural history. General Electric’s technicians began using AR smart glasses with special software, experiencing a measurable 34 percent improvement in productivity on first use compared to non-AR-enabled technicians. In health care, the SugAR Poke iPhone app, published by the Eradicate Childhood Obesity Foundation, combines AR and object-recognition technology to show users how much added sugar exists in food products. As this use in health care demonstrates, spatial computing can nudge users to make healthier and more informed decisions. For blind or visually impaired users, academics at Dartmouth College are developing a Microsoft HoloLens app that uses pattern and voice recognition to help those with vision difficulties identify and read indoor signs, improving maneuverability in text-heavy environments. Some of these existing spatial computing case studies are valuable socially, as they provide users with enhanced learning tools, relevant data to inform customers, and opportunities for community and social involvement.
SpatialFlow is a project created to better serve creative practices, making programming flow more open, accessible, and collaborative.

Durbin, Joe, et al. “Real-Time Coding In VR With ‘RiftSketch’ From Altspace Dev.” UploadVR, 12 Feb. 2016, uploadvr.com/riftsketch/.

RiftSketch, created by Brian Peiris, is a virtual reality software application that allows a user to program visualizations inside a virtual reality environment. The software places the user inside a barren virtual world, with a checkerboard pattern stretching out below as the floor. In front of the user is a single curved virtual screen, used for text editing. There is no way to point at or grab objects with the hands; the programming is done through the real, physical keyboard. As the user types out code, the program creates and manipulates objects in the world, live. RiftSketch also allows the user to animate the scene by running through the code every frame, making the scene more active and life-like. The software runs on PCs, web browsers, and mobile phones. Using three.js, a JavaScript programming library, the user can watch the results of their code appear in front of them, and can view and interact with their creations in a richer way. This breaks the programmer out of the traditional cycle, which involves four distinct phases: edit, compile, link, and run. Instead, live programming involves only one theoretical phase, moving the graphical output closer to the user’s speed of thought. RiftSketch offers a compelling visual experience, modifying the way that the creator interacts with the creation, bringing both agents into the same virtual space. One version of the tool allows users to see their keyboard and hands holographically, by setting up a webcam over the keyboard, which is overlaid as a plane in the world. A Leap Motion controller can also be used for some simple gestures, such as incrementing and decrementing numbers by moving the hand up and down. However, RiftSketch does not attempt to fully augment the user experience of doing the creative programming itself.
There is still much room to explore how the experience of programming can be shifted away from the normative keyboard-and-text interface, such as by implementing more ways to move around and manipulate objects in the environment. It would be interesting to see approaches to programming in VR that do not rely on the keyboard.

Elliott, Anthony. “Using Virtual Reality to Create Software: A Likely Future.” Medium, 23 Jan. 2015, medium.com/@anthonyE_vr/using-virtual-reality-to-create-software-a-likely-future-9c4472108289.

This article explains the affordances of using virtual reality as a tool for creating software, and gives a survey of current options and uses. VR allows users to interact in a digital environment in a more intuitive way, e.g. without needing to learn how to use the mouse and keyboard, which are peripheral to the virtual objects being manipulated. VR head-mounted displays (HMDs) use stereoscopic rendering, allowing users to perceive distance by engaging the brain’s spatial processing system. Some research has shown that performance can improve due to the enhanced spatial capabilities of VR. Current HMD technology also allows for body movement. The Oculus Rift can detect head position and rotation. Its two controllers can detect the same data for each hand, and include buttons for more interaction capabilities. The Leap Motion Controller is a small device able to sense the hands and fingers when placed near the keyboard. Another affordance of VR environments is their malleability: they are more easily modified than physical objects. This allows users to perform tasks more efficiently. Finally, developers can begin to use VR for programming, with new tools in their infancy. RiftSketch allows programmers to live-code 3D scenes in JavaScript using an Oculus Rift. Immersion gives the ability to separate text-based code into fragments, stacking them into piles, and thereby sorting them. These piles can be further expanded into a ring for analysis. This enables spatial reasoning, judging different factors by distance and pile size. Gestures such as grabbing can be used in VR to choose and move objects, making tasks more intuitive. VR opens up new possibilities in remote collaboration, as well as pair programming, design, and data visualization. In SpatialFlow, a paramount goal is to make interactions intuitive.
Instead of pointing, users are encouraged to ‘grab’ and ‘drag’ visual logic nodes (like Lego blocks) and to ‘hit’ buttons as they would in the physical world.

Finch, Sarah. “At A Glance – Spatial Computing.” Disruption Hub, 3 May 2018, disruptionhub.com/spatial-computing/.

Gobo, Giampietro, and Lukas T. Marciniak. “Ethnography.” Qualitative research 3.1 (2011): 15-36.

Grenny, Joseph, and David Maxfield. “A Study of 1,100 Employees Found That Remote Workers Feel Shunned and Left Out.” Harvard Business Review, 14 May 2018, hbr.org/2017/11/a-study-of-1100-employees-found-that-remote-workers-feel-shunned-and-left-out.

Handy, Alex. “Dynamicland Radically Rethinks the Computer Interface.” The New Stack, 19 Jan. 2018, thenewstack.io/dynamicland-rethinks-computer-interfaces/.

Dynamicland is a spatial computing project and new tech organization located in Oakland, California. It is dedicated to understanding how user interfaces will evolve over thousands of years. Dynamicland transforms a building into a computer, where the UI can be a wall or a table. Paper and sticky notes can be found everywhere. The ceilings are covered in cameras and projectors. Each individual sheet of paper represents its own piece of code, written in the Lua programming language. Some printouts have their own code printed on them. The system is capable of optical character recognition (OCR), and can run it in real time. When a section is changed, the system can highlight the edited area. Dynamicland’s operating system, dictating how objects relate to each other, is also printed out on paper. In one section of the building is a drawing program, where users can select colours using a laser pointer directed at a palette of sticky notes. The team further developed Dynamicland to enable work-like behaviour. One example is the use of sheets of paper representing datasets: moving them next to a graph sheet brings the data into the graph. Another page uses a stick as a slider to pick the year of the information visualized. Multiple users can interact with a program and its code directly, together in the same space. Dynamicland as a creative space reflects the vision of SpatialFlow, creating an environment that encourages collaboration and playful experimentation, as well as movement through the space. The concept of using physical objects differs from that of SpatialFlow, where the user currently only manipulates virtual objects in the room. Dynamicland’s displays are rather flat, as they are based on sheets of paper, while SpatialFlow’s visualizations are three-dimensional for the most part. It will be interesting to see how the physical and the virtual can be best combined for ease of use.

Harris, Sam. “The Benefits and Pitfalls of Pair Programming in the Workplace.” FreeCodeCamp.org, 22 Aug. 2017, www.freecodecamp.org/news/the-benefits-and-pitfalls-of-pair-programming-in-the-workplace-e68c3ed3c81f/.

MacKenzie, I. Scott. Human-Computer Interaction: an Empirical Research Perspective. Morgan Kaufmann, 2013.

Metcalfe, Tom. “Futuristic ‘Hologram’ Tech Promises Ultra-Realistic Human Telepresence.” NBCNews.com, NBCUniversal News Group, 7 May 2018, www.nbcnews.com/mach/science/futuristic-hologram-tech-promises-ultra-realistic-human-telepresence-ncna871526.

This article discusses a newly developed 3D display system called TeleHuman 2, created by Canadian researchers at Queen’s University. The device is able to transmit a true-size, 360-degree virtual image of a person that can be viewed without external physical devices such as headsets or mirrors. The projected 3D image looks real from all angles. With this display, the person appears to be standing inside the large cylinder. The system uses three stereoscopic cameras, recording data about the shape of the person and what they look like. It takes live video from the front, back, and side angles in order to reconstruct the 3D shape, known as a lightfield. Then, this lightfield is transmitted over a network or internet connection to the cylinder screen. This screen is constructed out of a reflective material, and uses an external hoop of over 40 projectors to achieve the effect. It uses approximately six times the bandwidth of a standard 2D video call. This futuristic technology opens up new avenues for collaboration, making some form of telepresence a reality. Researchers have been attempting, for decades, to mimic the holographic images seen in popular sci-fi movies, such as Star Wars. It has been difficult to create 3D projections in thin air, as they currently need to be projected onto a surface to be viewed properly. This project’s work in telepresence has some affordances for spatial computing, but it also has clear limits. TeleHuman 2 could be effective for standard conference calls and meetings, where collaborators may be sitting down or rather stationary, but it would become troublesome when more movement of the body is required. For example, in SpatialFlow, walking and outstretching the arms is necessary in order to take advantage of all the program’s features. Users should feel free to move around the room, interacting with different projected objects.

Nagy, Danil. “Computational Design in Grasshopper.” Medium, Generative Design, 6 Feb. 2017, medium.com/generative-design/computational-design-in-grasshopper-1a0b62963690.

“Remote Material Nodes.” Unreal Engine Forums, 3 Mar. 2015, forums.unrealengine.com/unreal-engine/feedback-for-epic/31850-remote-material-nodes.

Repenning, Alexander, et al. “Beyond Minecraft: Facilitating Computational Thinking through Modeling and Programming in 3D.” IEEE Computer Graphics and Applications, vol. 34, no. 3, 12 May 2014, pp. 68–71., doi:10.1109/mcg.2014.46.

This paper, by researchers at the University of Colorado Boulder, acknowledges the interest of children in 3D design environments, such as the video game Minecraft. It claims that representing objects and behaviour in 3D environments is an activity which involves both programming and design, and that children are focused on modeling the virtual world, and see the programming aspect as a part of the design process rather than an arbitrary skillset. The researchers have introduced over 12,000 students and 200 teachers to 2D and 3D programming environments to create games and simulations. Some comparisons are made between programming in two and three dimensions. The paper outlines three significant aspects in motivating students to learn programming: ownership, spatial thinking, and syntonicity. It is helpful for students to feel that their creations are their own. For example, students can first draw objects that they then use as characters in their worlds. The creation of simple 3D objects can be made accessible to a larger audience by having users pick shapes from a palette, such as the various cubes in Minecraft, or by taking a paint-then-model approach. This is done by allowing students to sketch a 2D object, then ‘inflate’ it at various points to add elevation, creating a simple 3D model of their own. Compared to creating in 2D, assembling 3D objects in 3D worlds places heavier demands on students’ reasoning and spatial thinking skills. It is important to understand the control of cameras, the stacking and positions of objects, and how to use layers to create more complex worlds. The final concept, syntonicity, comes from Seymour Papert’s speculation that “humans’ ability to project themselves onto objects–essentially becoming the objects–would help them overcome otherwise difficult programming challenges” (Repenning et al. 70). Papert later developed a robotic turtle, which students could program using instructions that made it move and turn.
Later, this was developed into a virtual system, where the student could take on the frog’s perspective through computer graphics. Within 3D worlds, it becomes possible to map the psychological perspective of turning into an object onto an interface that presents it from a first-person perspective, possibly making some concepts easier to understand. This could make VR especially valuable, while spatial computing may be more limited in this regard. However, a head-mounted display capable of covering the entire view could potentially combine the affordances of both AR and VR in 3D programming by making both options available to users.

Victor, Bret. “Research Agenda and Former Floor Plan.” Communications Design Group SF, 10 Mar. 2014.